Hey everyone, welcome back to WSQ Time! Today, we’re delving into the inner workings of search engines and how they operate to deliver the information you need. I’m Shivani, and I’m excited to take you through the basics of SEO, starting with the fundamentals of how search engines function.

Understanding Search Engines

Let’s begin with a simple question: what exactly is a search engine? At its core, a search engine is a powerful software program designed to help users find information online using keywords or phrases. You’re probably familiar with the most popular ones like Google, Bing, and Yahoo. These platforms sift through and organize vast amounts of data from billions of web pages, making it accessible and relevant to your queries.

The Components of a Search Engine

A search engine relies on several components working behind the scenes. One runs when you type a query, while the others do their work ahead of time:

- Query Engine: This component processes and interprets your search query to understand what information you’re looking for.

- Crawler (or Spider): Think of this as the explorer of the internet. The crawler’s job is to navigate through web pages, reading and collecting information. It starts from a seed URL (like your homepage) and follows links to other pages, systematically gathering what it finds and handing it to the indexer.

- Indexer: Once the crawler gathers information, the indexer organizes it into a structured format for quick retrieval. It’s like creating an organized index in a book, where keywords and their associated web pages are cataloged for future searches.

Crawling: How Information is Gathered

Crawling is where the action happens. Picture your website as a library filled with different types of content—text, images, videos, and more. The crawler visits your site, methodically scanning and storing this information in its database. It ensures that the search engine has a comprehensive view of what your website offers.

If your web pages include links to other sites, the crawler follows these links, visiting those pages and gathering additional information. This process, known as crawling, helps build a map of the interconnected web.
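To make the link-following idea concrete, here’s a minimal sketch of a breadth-first crawl. It assumes a tiny in-memory link graph (the hypothetical `LINKS` dictionary) instead of real HTTP requests, so the page names and the `crawl` function are purely illustrative and not how any particular search engine does it.

```python
from collections import deque

# Hypothetical link graph standing in for the live web: page -> pages it links to.
LINKS = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["post-1", "post-2"],
    "post-1": ["blog", "external-site"],
    "post-2": [],
    "external-site": [],
}

def crawl(seed, links):
    """Visit pages breadth-first from the seed, following each link only once."""
    visited = []             # pages in the order they were crawled
    seen = {seed}            # pages already queued, so loops are not re-crawled
    frontier = deque([seed])
    while frontier:
        page = frontier.popleft()
        visited.append(page)  # a real crawler would download and store the page here
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                frontier.append(target)
    return visited

crawl_order = crawl("home", LINKS)
print(crawl_order)
```

Notice that the `seen` set is what keeps the crawler from going in circles when pages link back to each other, which happens all the time on real sites.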

Policies Governing Crawling

Crawling operates under specific guidelines to ensure efficiency and fairness:

- Selection Policy: Determines which pages the crawler should prioritize downloading based on various factors like relevance and authority.

- Revisit Policy: Specifies how often the crawler should revisit web pages to check for updates or changes. This ensures that search results are current and accurate.

- Parallelization Policy: Involves using multiple processes to explore and gather information from links simultaneously, speeding up the crawling process.

- Politeness Policy: Includes crawl delays to prevent overwhelming web servers with too many requests at once, promoting fair access to information.
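One common way a site tells crawlers how polite to be is a robots.txt file. The sketch below uses Python’s standard `urllib.robotparser` module to read a hypothetical robots.txt and plan when each page could be fetched. The file contents, the `ExampleBot` user agent, and the `plan_fetches` helper are assumptions for illustration, and not every crawler honors the `Crawl-delay` directive.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 2
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def plan_fetches(paths, user_agent="ExampleBot"):
    """Return (path, start_time_in_seconds) for every path the rules allow."""
    delay = parser.crawl_delay(user_agent) or 0  # no delay if none is declared
    schedule = []
    next_start = 0
    for path in paths:
        if parser.can_fetch(user_agent, path):
            schedule.append((path, next_start))
            next_start += delay  # space requests out instead of sending them all at once
    return schedule

schedule = plan_fetches(["/", "/blog/", "/private/notes", "/contact"])
print(schedule)
```

The disallowed path is simply skipped, and the allowed pages are spaced two seconds apart rather than requested in one burst.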

Indexing: Organizing for Easy Retrieval

Once the crawler collects data, the indexer steps in to organize it effectively. Keywords extracted from web pages are stored in a structured format, much like an index in a book. This indexing process enables the search engine to quickly locate relevant pages when a user enters a search query.

Types of Indexing

There are two primary types of indexing:

- Forward Index: Lists all the keywords present in a document along with their respective locations. It is organized by document, so it answers the question “which words does this page contain?”

- Backward Index: More commonly called an inverted index, it lists the documents that contain each keyword. It is organized by keyword, so it is the structure that lets the search engine quickly retrieve the pages matching a search query.

Both types of indexing play a crucial role in how efficiently a search engine delivers search results to users.
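Here’s a small sketch of how the two indexes relate, assuming a hypothetical two-page corpus (`DOCS`) and simple whitespace tokenization. The backward index, usually called an inverted index, is built by flipping the forward index around. Real indexers handle punctuation, stemming, and much larger data, so treat this only as an illustration.

```python
from collections import defaultdict

# Hypothetical mini-corpus: document id -> page text.
DOCS = {
    "page-a": "search engines crawl pages",
    "page-b": "engines index pages",
}

def build_forward_index(docs):
    """Map each document to its words and the positions where they occur."""
    forward = {}
    for doc_id, text in docs.items():
        positions = defaultdict(list)
        for position, word in enumerate(text.lower().split()):
            positions[word].append(position)
        forward[doc_id] = dict(positions)
    return forward

def build_inverted_index(forward):
    """Flip the forward index: map each word to the documents containing it."""
    inverted = defaultdict(set)
    for doc_id, words in forward.items():
        for word in words:
            inverted[word].add(doc_id)
    return {word: sorted(doc_ids) for word, doc_ids in inverted.items()}

forward_index = build_forward_index(DOCS)
inverted_index = build_inverted_index(forward_index)
print(forward_index["page-a"])
print(inverted_index["pages"])
```

Looking up `inverted_index["pages"]` is a single dictionary access, which is why the keyword-first layout is the one used to answer queries.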

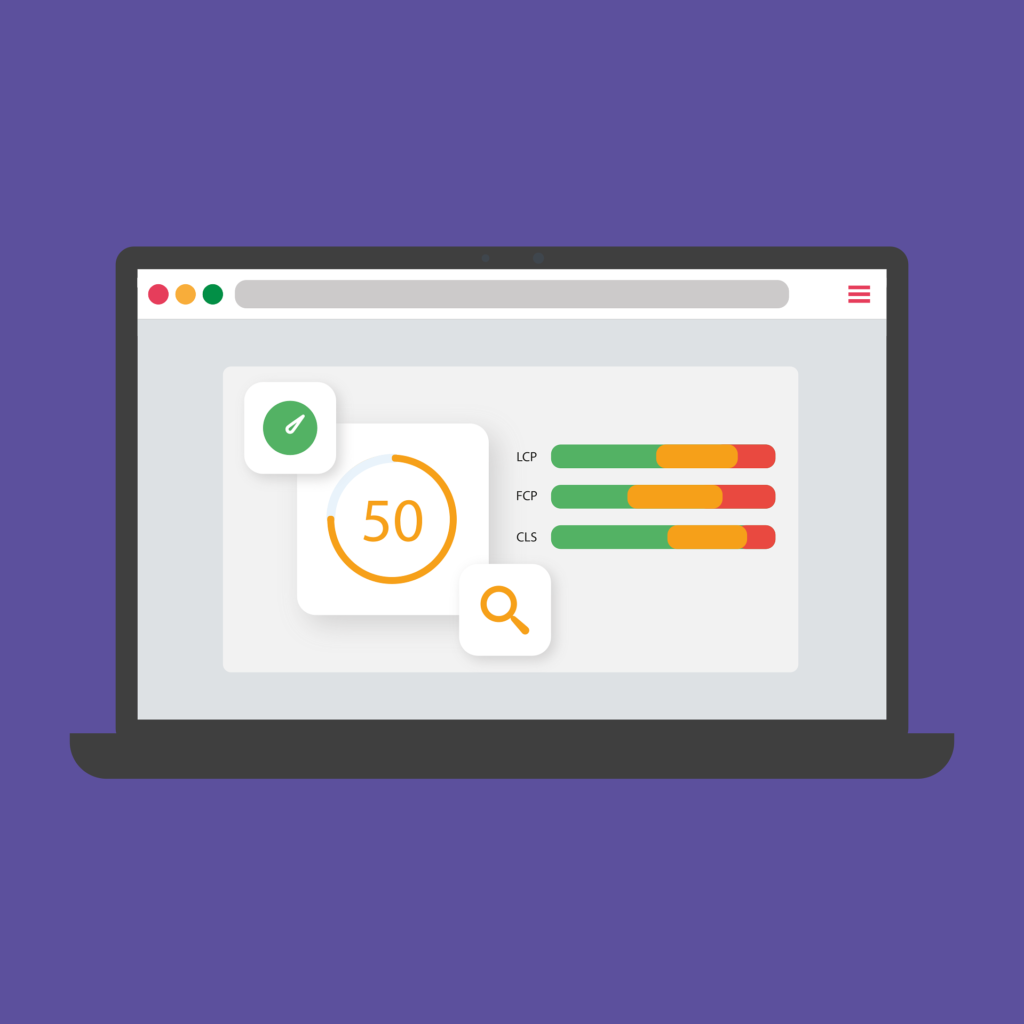

How Search Engines Deliver Results

When you enter a query into a search engine, a ranking algorithm springs into action. It sifts through the indexed database to identify web pages that best match your query. The results are then presented to you in a list format, ranked by relevance and other factors determined by the algorithm.
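As a rough illustration of ranking, the sketch below scores pages by how many times the query words appear in them. This term counting is a stand-in I’m assuming for demonstration; real ranking algorithms weigh many more factors, and the `PAGES` data and `rank` function are hypothetical.

```python
from collections import Counter

# Hypothetical indexed pages: page id -> text.
PAGES = {
    "page-a": "seo basics and seo tips",
    "page-b": "cooking tips",
    "page-c": "seo tools",
}

def rank(query, pages):
    """Score pages by how often query words appear, highest score first."""
    terms = query.lower().split()
    scores = {}
    for page_id, text in pages.items():
        counts = Counter(text.lower().split())
        score = sum(counts[term] for term in terms)
        if score > 0:  # pages with no matching words are left out of the results
            scores[page_id] = score
    # Sort by descending score, then by page id so ties come out in a stable order.
    return sorted(scores, key=lambda page_id: (-scores[page_id], page_id))

results = rank("seo tips", PAGES)
print(results)
```

Here `page-a` comes first because it matches both query words, and matches one of them twice.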

Understanding Crawl Budget

Ever wondered how much of your website search engines actually visit? That’s where crawl budget comes into play. It refers to the number of pages a search engine spider will crawl on your site within a given timeframe. Optimizing your crawl budget involves strategies such as:

- Avoiding Heavy Media Files: Large files like videos or complex animations can slow down crawling and indexing processes.

- Optimizing Internal and External Links: Structuring your website with clear, well-connected internal links helps spiders navigate and index your site more effectively. Linking out to reputable, relevant sites may also help search engines understand what your pages are about.

- Utilizing Social Channels: Sharing your pages on social media drives traffic and can help new content get discovered sooner, although search engines do not necessarily treat social activity as a direct ranking signal.

In today’s discussion, we’ve covered the basics of how search engines work—from crawling and indexing to delivering search results and optimizing your crawl budget. In future articles, we’ll explore more advanced SEO strategies to help your website rank higher and attract more visitors.

Thanks for joining me on this journey through the world of search engines. Stay tuned for more insights and tips on optimizing your online presence. See you next time!